Technical SEO issues are more common than most users expect, but you do not have to hire a team of specialists to resolve them.

Developers and web designers want to ensure everything on their websites is up and running at optimal levels. Just as you monitor your website’s overall health, monitoring its SEO is important if you want your ranking to keep growing.

In this article, we will discuss some of the issues that affect your website’s technical SEO and how to find and solve them. We will also cover tools you can use to manage and monitor your website’s SEO ranking and Domain Authority score.

Domain Authority

Domain Authority (DA) is a score created by Moz that rates a website from 1 to 100 to predict how likely it is to rank well in search engine results.

Moz calculates the score from several factors, including the number of linking root domains and total links, so your domain’s score will fluctuate over time. Its algorithm compares how often Google uses your domain in search results with how often it uses other domains for the same searches.

If your domain appears in those results more often than a competitor’s domain, Moz treats it as having more authority. For example, Amazon has a much higher Domain Authority than a typical small eCommerce website.

Why does Domain Authority matter?

Domain Authority is not a Google ranking factor in and of itself. However, it does provide some insight into your website, its links, and how it compares to your competitors.

You do not have to aim for a DA of 100. That is the highest possible score, and reaching it means competing with very large companies such as Facebook or, as previously mentioned, Amazon.

For comparison, you can review Moz’s Top 500 Websites.

Webmasters should therefore focus on their links, keywords, and other SEO aspects that will affect their Google ranking.

Backlinks

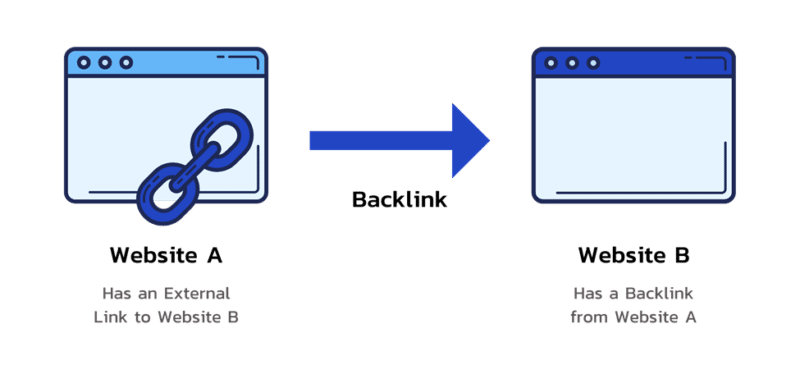

Backlinks, sometimes called inbound or incoming links, are links from one website to another.

When your website receives a backlink from an authoritative website, you gain what is known as a “vote of confidence.”

When multiple sources link to your website or to the same piece of content, search engines treat that as a sign your content is high quality.

However, what happens when links to your website are deemed “bad” or low-quality, and what does that mean for your site?

Toxic backlinks

Google defines bad backlinks as:

Any links intended to manipulate PageRank or a site’s ranking in Google search results may be considered part of a link scheme and a violation of Google’s Webmaster Guidelines. This includes any behavior that manipulates links to your site or outgoing links from your site.

In other words, if backlinks pointing to your website come from malicious or manipulative sources, your SEO can be negatively affected, because Google may treat them as part of a link scheme.

Google Search Central has further examples and information on what can cause your backlinks to be flagged as toxic or bad content.

How to find toxic backlinks and how to get rid of them

A link analysis is the best way to identify where your backlinks are coming from. Google Search Console’s Links report shows your linking profile; for a more in-depth look at your backlinks and their sources, you can use other tools.

Semrush has a Backlink Analysis tool that lets you review your links before making any changes. Note that Semrush is a paid resource.
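Whatever tool you use, the core of a link analysis is grouping backlinks by the domain they come from. The sketch below assumes you have exported the linking-page URLs (for example, from Search Console or Semrush) into a plain list of strings; real exports carry more columns, and the function names and sample URLs here are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def referring_domain(url: str) -> str:
    """Return the linking page's host, lowercased, without a leading 'www.'."""
    host = (urlsplit(url.strip()).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def count_referring_domains(source_urls: list[str]) -> Counter:
    """Count backlinks per referring domain, skipping blank rows."""
    return Counter(referring_domain(u) for u in source_urls if u.strip())


if __name__ == "__main__":
    # Hypothetical export: one linking-page URL per backlink.
    backlinks = [
        "https://www.example-blog.com/post-1",
        "https://example-blog.com/post-2",
        "http://links-directory.example/page?id=7",
        "http://links-directory.example/page?id=8",
        "http://links-directory.example/page?id=9",
    ]
    for domain, count in count_referring_domains(backlinks).most_common():
        print(f"{count:>3}  {domain}")
```

A single domain contributing an unusually large share of your links is a signal worth a closer manual look; a high count on its own does not make a link toxic.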

To address toxic backlinks, you can submit a disavow file through Google Search Console’s Disavow links tool. This asks Google to ignore those links when assessing your site, so they are not counted against you.

Disavowing backlinks should be a last resort, used only when links to your website are actively targeting your site and bringing your rank down.
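If you do decide to disavow, Google expects a plain-text file with one entry per line: `domain:` followed by a domain to disavow every link from that domain, a full URL to disavow a single page, and lines starting with `#` as comments. The helper below is a hypothetical sketch that assembles that text from lists you have already reviewed by hand; it formats the file but does not decide which links are toxic.

```python
from urllib.parse import urlsplit


def build_disavow_file(domains: list[str], urls: list[str], comment: str = "") -> str:
    """Return disavow-file text: an optional '#' comment, 'domain:' lines, then URLs.

    Only pass in links you have already reviewed manually; this helper
    formats the list, it does not judge which links are harmful.
    """
    lines = [f"# {comment}"] if comment else []
    # Normalize and de-duplicate domains before emitting 'domain:' entries.
    for domain in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append(f"domain:{domain}")
    for url in sorted({u.strip() for u in urls if u.strip()}):
        # Single-page entries must be full, absolute URLs.
        if urlsplit(url).scheme not in ("http", "https"):
            raise ValueError(f"not an absolute http(s) URL: {url!r}")
        lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        domains=["links-directory.example"],         # hypothetical domain
        urls=["http://spam.example/comments.html"],  # hypothetical page
        comment="Reviewed manually before upload",
    )
    print(text, end="")
```

Save the output as a UTF-8 `.txt` file and upload it with the Disavow links tool in Search Console.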

Valid markup

Although drag-and-drop builders are among the most popular ways to design a website today, every site is still made of code, and search engines review that code too.

W3C coding standards

The World Wide Web Consortium (W3C) is an international community that works together to develop Web standards.

It publishes these standards free of charge, guided by its principles of “Web for All” and “Web on Everything.” In other words, sites should be accessible to all users across browsers and devices.

What do coding standards have to do with SEO?

These standards help ensure your content works everywhere. If your code does not validate against them, you may run into cross-compatibility issues and errors that can negatively impact your SEO.

Passing W3C validation is not a ranking factor in itself, but validating your code can help you find and resolve issues such as:

- Malformed code

- Content rendering

- Microdata markup

- Heading structure
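To make the heading-structure item concrete, here is a small sketch built on Python’s standard `html.parser` that flags two common outline problems: a page without exactly one `<h1>`, and a jump of more than one heading level (for example, `<h2>` straight to `<h4>`). These are widely used SEO and accessibility conventions rather than W3C validation errors, and the function and class names are our own.

```python
from html.parser import HTMLParser

LEVELS = {f"h{n}": n for n in range(1, 7)}  # "h1" -> 1 ... "h6" -> 6


class HeadingCollector(HTMLParser):
    """Record the level of each <h1>-<h6> start tag in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names, so "H2" arrives as "h2".
        if tag in LEVELS:
            self.levels.append(LEVELS[tag])


def heading_problems(html: str) -> list[str]:
    """List heading-outline issues: missing or multiple <h1>, and skipped levels."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected one <h1>, found {levels.count(1)}")
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"<h{prev}> is followed by <h{cur}>")
    return problems


if __name__ == "__main__":
    page = "<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"
    print(heading_problems(page))  # ['<h2> is followed by <h4>']
```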

You can validate your code in several ways on the W3C website: by URL, by file upload, or by direct input.
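The W3C also runs the Nu Html Checker, which can be called from a script. This sketch assumes its JSON interface: POST the HTML to `https://validator.w3.org/nu/?out=json` with a `text/html` content type, then read the `messages` array in the response. The `User-Agent` string and function names are placeholders, and for bulk checks you may want to run your own instance of the checker instead of loading the public service.

```python
import json
import urllib.request

NU_CHECKER_URL = "https://validator.w3.org/nu/?out=json"


def validate_html(html: str, timeout: float = 30.0) -> dict:
    """Send an HTML document to the Nu Html Checker and return its JSON report."""
    request = urllib.request.Request(
        NU_CHECKER_URL,
        data=html.encode("utf-8"),
        headers={
            "Content-Type": "text/html; charset=utf-8",
            "User-Agent": "example-seo-audit/0.1",  # placeholder client name
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def summarize(report: dict) -> list[str]:
    """Turn the report's 'messages' list into 'line N: kind: text' strings."""
    lines = []
    for msg in report.get("messages", []):
        # Warnings arrive as type "info" with subType "warning".
        kind = msg.get("subType") or msg.get("type", "unknown")
        lines.append(f"line {msg.get('lastLine', '?')}: {kind}: {msg.get('message', '')}")
    return lines
```

Calling `summarize(validate_html(page_html))` on a page’s source gives one readable line per error or warning, which is easy to log or compare between audits.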

Validating your code also helps ensure your site works for users around the globe and across platforms, reduces code bloat, and creates a better overall user experience, all of which contribute to better SEO management.

Other factors that might affect your technical SEO

The following are some further issues you might encounter after auditing your website.

- Resources blocked by robots.txt – If you block CSS or JS here, search engines cannot render your content.

- Broken links – Broken links hurt the user experience, and because they point to pages that no longer exist or to misspelled URLs, they also make your content look poorly maintained.

- Missing images – Like broken links, missing images occur when an image on a page has been removed or its URL has changed. Make sure your image references are up to date and your images are optimized for search engines.

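- Server errors – 5xx responses mean search engines (and visitors) cannot load the page at all, so persistent server errors can keep pages from being crawled and indexed.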

- 404 not found – According to Google, 404 pages can affect your website in several ways. Many 404 pages with no 301 redirects or custom 404 page can signal poor content or problems with your links, especially because those URLs often continue to be crawled.

- 301 redirect loops – A redirect loop occurs when a URL redirects to another URL that, directly or through further redirects, leads back to the first one, so the chain never reaches a final page. Because Google cannot reach a final destination, it cannot gather information from that page. Update your links to point directly to the correct destination so they no longer depend on a 301.

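- Mixed content warnings – Mixed content occurs when an HTTPS page loads some resources, such as images, scripts, or stylesheets, over plain HTTP. Those insecure resources can be intercepted or tampered with, and browsers may block them or flag the page as not secure, which can negatively affect your SEO.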
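Several of the issues above can be spotted with a few lines of Python before you reach for a full crawler. The sketch below uses only the standard library and covers three of them: URLs blocked by robots.txt (via `urllib.robotparser`), insecure `http://` subresources on a page (mixed content), and redirect chains or loops. The redirect check is offline: it assumes a hypothetical mapping of each redirecting URL to its target, for example one built from a crawl export. All function and class names are our own.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


# 1. Resources blocked by robots.txt
def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


# 2. Mixed content: http:// subresources on an HTTPS page
class MixedContentFinder(HTMLParser):
    """Collect plain-http URLs of images, scripts, frames, media and stylesheets."""

    SUBRESOURCE_ATTR = {"img": "src", "script": "src", "iframe": "src",
                        "audio": "src", "video": "src", "source": "src",
                        "link": "href"}

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = self.SUBRESOURCE_ATTR.get(tag)
        if attr is None:
            return
        values = dict(attrs)
        # This sketch only checks <link rel="stylesheet">; canonical or
        # alternate links are references, not loaded subresources.
        if tag == "link" and "stylesheet" not in (values.get("rel") or "").lower():
            return
        url = (values.get(attr) or "").strip()
        if url.lower().startswith("http://"):
            self.insecure.append(url)


def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


# 3. Redirect chains and loops (offline, from a URL -> target mapping)
def follow_redirects(start: str, redirects: dict[str, str],
                     max_hops: int = 10) -> tuple[list[str], str]:
    """Follow `redirects` from `start`; report 'ok', 'chain', 'loop' or 'too many hops'."""
    chain, seen = [start], {start}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:
            return chain, "loop"
        if len(chain) - 1 > max_hops:
            return chain, "too many hops"
        seen.add(target)
    # One redirect is fine; two or more means links should point at the final URL.
    return chain, "ok" if len(chain) <= 2 else "chain"


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /wp-includes/\n"
    print(blocked_urls(robots, ["https://example.com/wp-includes/css/style.css",
                                "https://example.com/assets/app.js"]))
    print(find_mixed_content('<img src="http://example.com/logo.png"><script src="/app.js"></script>'))
    print(follow_redirects("/a", {"/a": "/b", "/b": "/c", "/c": "/a"}))
```

Python’s `robotparser` applies rules in file order and does not understand `*` or `$` wildcards inside paths the way Googlebot does, so treat its answers as a first pass and confirm anything surprising with Search Console’s URL Inspection tool.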

Further resources

There are many tools for checking your SEO standing and finding ways to improve it. These are just some of our recommendations.

- Backlinko is a great resource for SEO training and link-building strategies.

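- Google’s Core Web Vitals are a set of metrics covering loading speed, interactivity, and visual stability; tools such as PageSpeed Insights and the Core Web Vitals report in Search Console show how your site performs on them and what might be slowing it down.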

- Google Search Console is Google’s free tool for link and page checks and much more.

- Screaming Frog is desktop-based software that crawls your website and can integrate data from your Google services. Its audit scans for broken links, duplicate content, and server errors, among other technical data points.

- SEORCH can give you vital information on your keyword density, heading structure, and code-to-text ratio.

- Semrush is a paid resource with incredible tools that help you understand your links, keywords, ranking, and more. They also offer courses, e-books and community support.
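Keyword density and code-to-text ratio are simple ratios, so it can help to see how they are typically computed. This is a rough sketch under our own assumptions: density is counted as keyword (or phrase) occurrences per 100 words of visible text, and the ratio as visible-text characters over total HTML characters, ignoring `<script>` and `<style>` content. SEORCH and other tools may define both metrics differently, so expect their numbers to differ.

```python
import re
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that is outside <script> and <style> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0  # depth inside script/style

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def visible_text(html: str) -> str:
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())  # collapse whitespace


def text_to_code_ratio(html: str) -> float:
    """Percentage of the page's characters that are visible text."""
    return 100 * len(visible_text(html)) / len(html) if html else 0.0


def keyword_density(html: str, keyword: str) -> float:
    """Occurrences of `keyword` (a word or phrase) per 100 words of visible text."""
    words = re.findall(r"[a-z0-9']+", visible_text(html).lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    hits = sum(words[i:i + n] == phrase for i in range(len(words) - n + 1))
    return 100 * hits / len(words)


if __name__ == "__main__":
    page = ("<html><head><style>p { color: red; }</style></head>"
            "<body><p>SEO tips and more SEO tips</p></body></html>")
    print(round(keyword_density(page, "SEO tips"), 1))  # 33.3
    print(round(text_to_code_ratio(page), 1))
```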

Final words

Technical SEO can be intimidating for some users because it is not as easy to change as general SEO elements such as content and keywords. It often requires changes to code, site architecture, and the web server to resolve issues.

Consistent monitoring and full site audits help you catch these issues early. Tools like Semrush and Google Search Console can help your site improve not only its ranking but also its Domain Authority.

If you are interested in learning more about improving your SEO ranking and optimizing your website, amongst other things, check out InMotion Hosting’s blog.

The post Top technical SEO issues every webmaster should master appeared first on Search Engine Land.

Source: Search Engine Land