Anyone who runs an e-commerce site is probably familiar with the “URL Parameters Tool.” This is a feature in Google Search Console that SEOs have long used to help control how their websites are crawled. In this tool, you tell Google what each of your URL parameters does and how Google should crawl it (“Let Googlebot Decide,” “No URLs,” etc.). Google has provided extensive documentation on the settings that can be configured and how the crawl commands interact with each other.

However, recently Google has moved this tool to the ambiguous “Legacy tool and reports” section. Ever since that time, I’ve wondered what that meant for the tool. Is this just a way of categorizing an older feature? Does Google plan on sunsetting it eventually? Does Google even still use the commands here?

I’ve also found it interesting that, when reviewing client log files, we’ve encountered examples where Google didn’t appear to be abiding by the rules set in the URL Parameters tool.

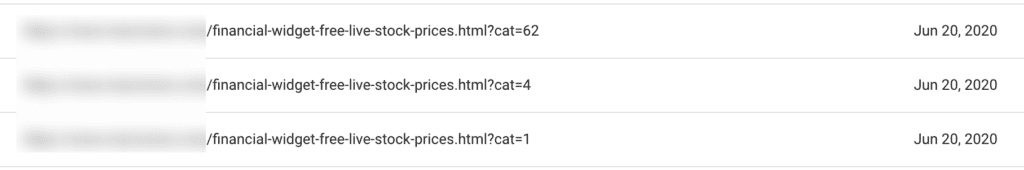

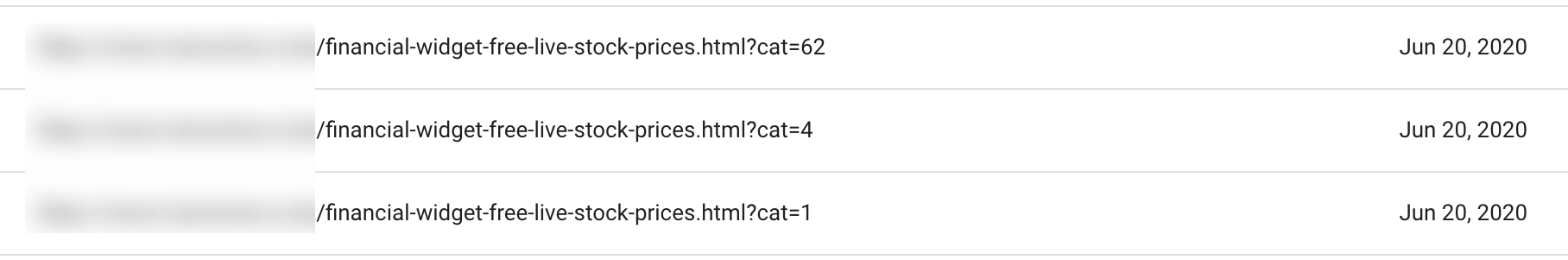

To find out more, I decided to perform a test. I took one of our test sites and found URL parameters that Google was crawling. Using Google’s Index Coverage report, I was able to confirm Googlebot was crawling the following parameters:

?cat

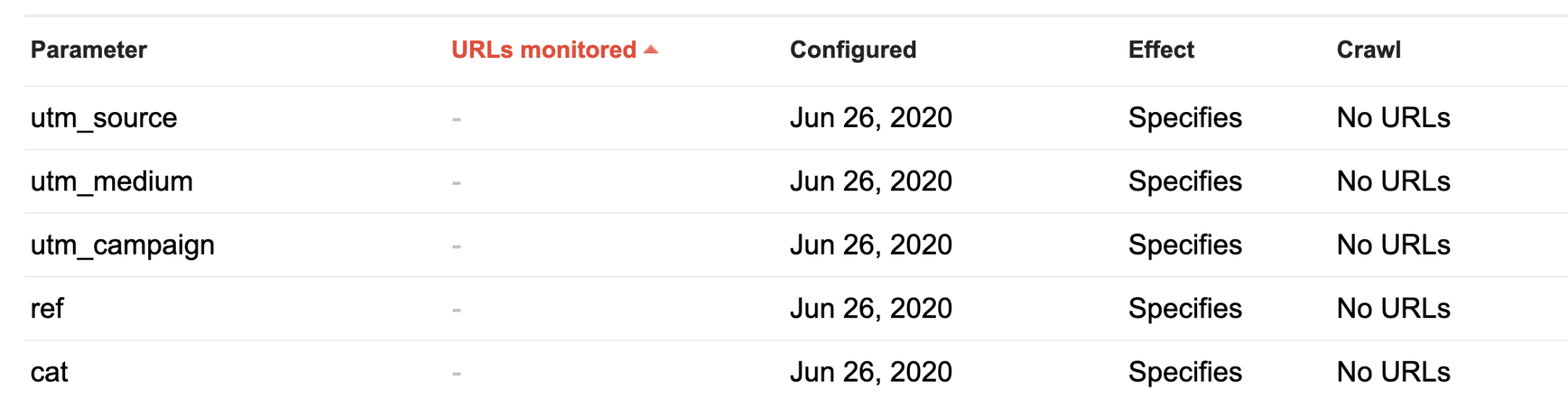

?utm_source

?utm_medium

?utm_campaign

?ref

On June 26, I added these parameters to Google’s URL Parameters tool and instructed Googlebot to crawl “No URLs” for each of them.

I then waited and monitored Google’s crawl of the site. After collecting a couple of weeks’ worth of data, we can see that Google was still crawling these URL parameters. The primary parameter we found activity on was “?cat”:

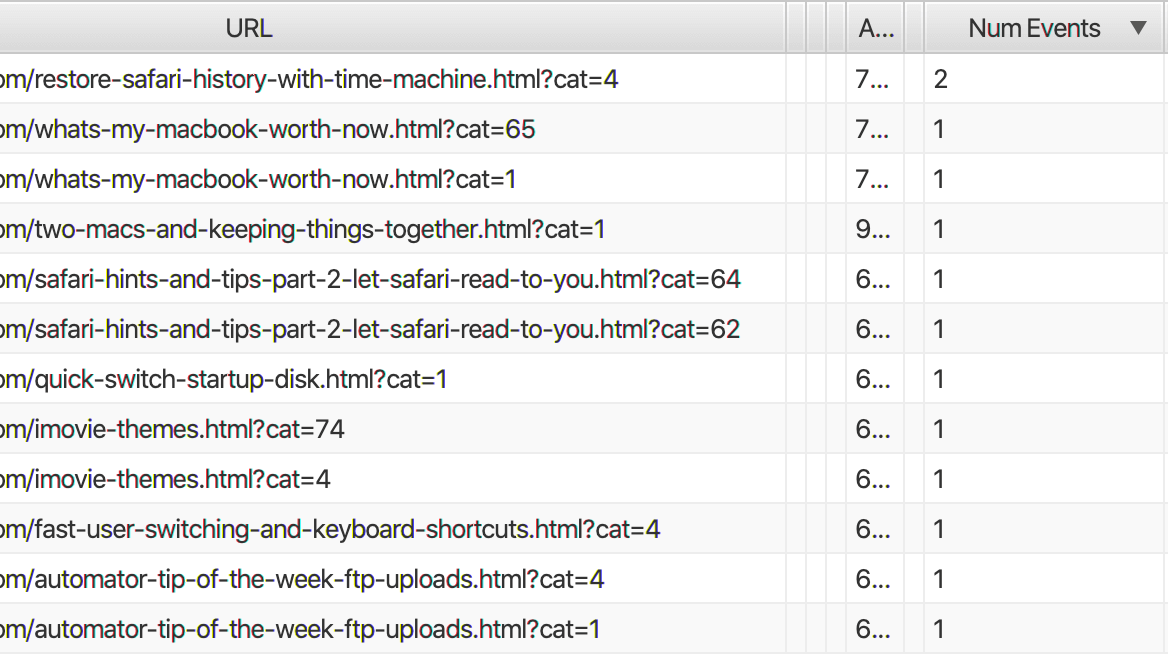

Zooming out a bit further, you can see that these are verified Googlebot events that occurred on June 27 or later, after the crawl settings had been configured:

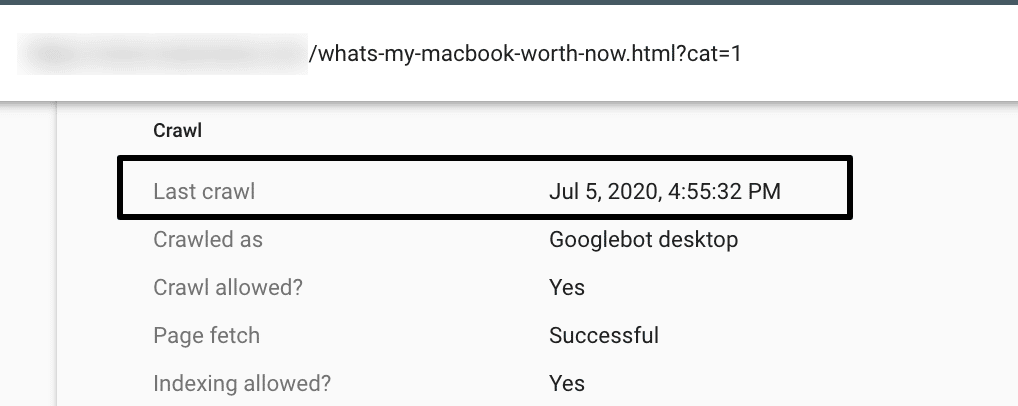

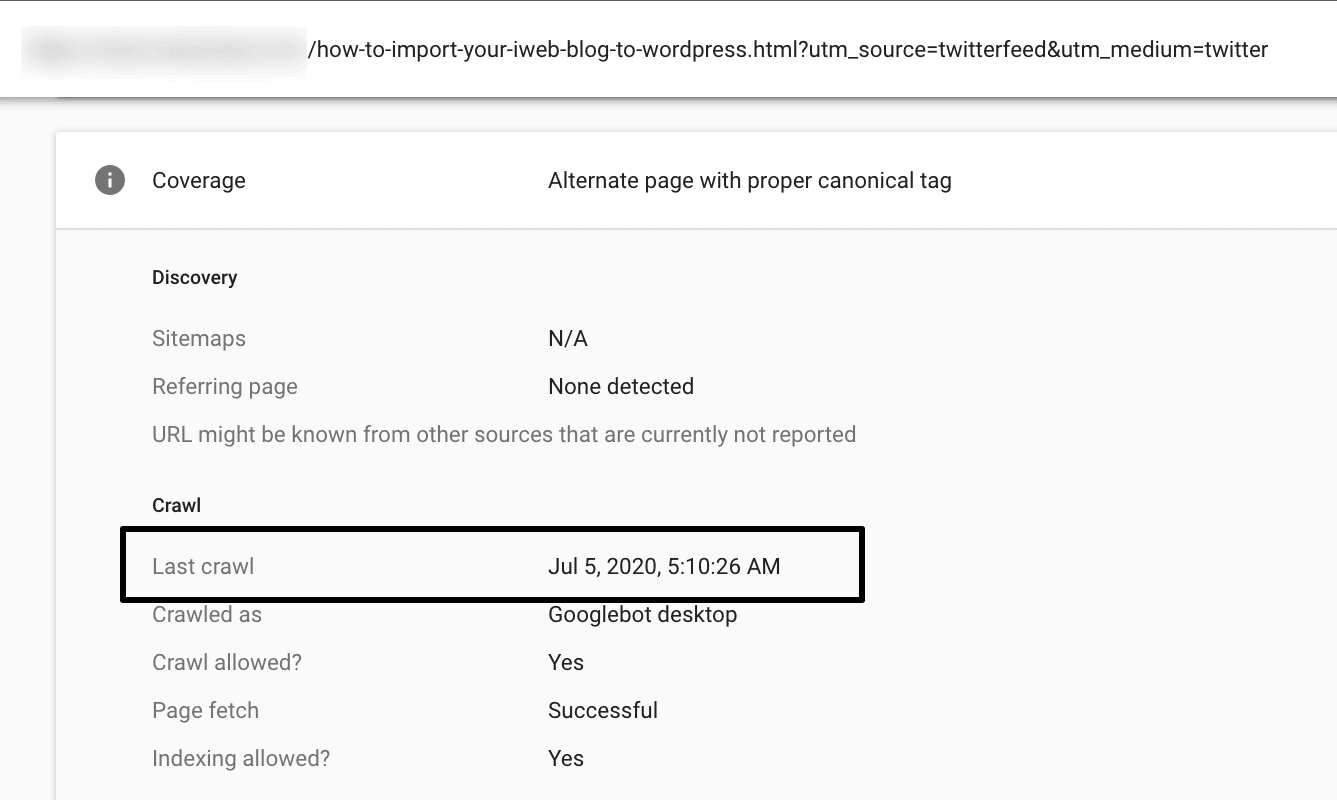

We were also able to confirm crawl activity on both “?cat” and “?utm” URLs using Google’s URL Inspection Tool. Notice how the URLs had “Last crawl” dates after the new rules took effect.
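For readers who want to run a similar check on their own log files, here is a minimal Python sketch. It assumes the server writes the common "combined" access log format, and it only matches on the user-agent string, so it does not prove a request really came from Googlebot (Google documents a reverse DNS lookup for that). The function name, the sample log lines, and the year in the cutoff date are all made up for illustration; the article does not give a year.

```python
import re
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs

# Assumed "combined" log format: ip - - [time] "METHOD path PROTO" status bytes "referer" "ua"
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<ts>[^\]]+)\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Parameters set to "No URLs" in the test described above.
BLOCKED_PARAMS = {"cat", "utm_source", "utm_medium", "utm_campaign", "ref"}


def googlebot_param_hits(lines, cutoff, params=BLOCKED_PARAMS):
    """Return (timestamp, path, matched_params) for requests whose user agent
    claims to be Googlebot, made at or after `cutoff`, on URLs that carry
    at least one of the given query parameters."""
    hits = []
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("ua"):
            continue
        ts = datetime.strptime(match.group("ts"), "%d/%b/%Y:%H:%M:%S %z")
        if ts < cutoff:
            continue
        query = urlsplit(match.group("path")).query
        found = set(params) & set(parse_qs(query, keep_blank_values=True))
        if found:
            hits.append((ts, match.group("path"), sorted(found)))
    return hits


# Hypothetical sample data; the year is a placeholder.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = f"""
66.249.66.1 - - [26/Jun/2020:23:59:00 +0000] "GET /products?cat=3 HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"
66.249.66.1 - - [27/Jun/2020:10:15:32 +0000] "GET /products?cat=12 HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"
203.0.113.7 - - [28/Jun/2020:08:00:00 +0000] "GET /?utm_source=mail HTTP/1.1" 200 900 "-" "Mozilla/5.0"
66.249.66.1 - - [29/Jun/2020:09:00:00 +0000] "GET /about HTTP/1.1" 200 700 "-" "{GOOGLEBOT_UA}"
"""
CUTOFF = datetime(2020, 6, 27, tzinfo=timezone.utc)

if __name__ == "__main__":
    for ts, path, matched in googlebot_param_hits(SAMPLE_LOG.strip().splitlines(), CUTOFF):
        print(ts.isoformat(), path, matched)
```

On a real site you would pass the open log file in place of the sample lines and set the cutoff to the day after your settings were saved.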

What does this mean for SEOs?

While we’re not seeing overwhelming crawl activity, it is an indicator that Google might not always respect the rules in the URL Parameters tool. Keep in mind that this is a smaller site (around 600 pages), so the scale at which these URL parameters are crawled is much lower than it would be on a large e-commerce site.

Of course, this isn’t to say that Google is always ignoring the URL Parameters tool. However, in this particular instance, we can see that it might be the case. If you run an e-commerce site, I would recommend not making assumptions about how Google is crawling your parameters and instead checking your log files to confirm crawl activity. Overall, if you’re looking to limit the crawl of a particular parameter, I’d rely on robots.txt first and foremost.
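As a rough illustration of the robots.txt approach, the Python sketch below generates Disallow rules for a list of parameters. It relies on the `*` wildcard, which Google supports in robots.txt, and emits two patterns per parameter so the rule matches whether the parameter comes first (`?cat=`) or later (`&cat=`) in the query string. The helper name is hypothetical, and the output is a starting point to review against your own URLs, not a drop-in file.

```python
def robots_rules_for_params(params, user_agent="*"):
    """Build a robots.txt group that blocks crawling of URLs carrying any of
    the given query parameters. Hypothetical helper for illustration."""
    lines = [f"User-agent: {user_agent}"]
    for name in params:
        lines.append(f"Disallow: /*?{name}=")  # parameter is first in the query string
        lines.append(f"Disallow: /*&{name}=")  # parameter follows another one
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(robots_rules_for_params(["cat", "ref"]))
```

It is worth testing the generated rules against a sample of real URLs before deploying them, since an overly broad pattern can block pages you want crawled.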

The post Does Google respect the URL parameters tool? appeared first on Search Engine Land.

Source: IAB